The Sound Classification Challenge

AudioSet is the ImageNet of audio: 2M+ clips across 632 sound classes. Understanding how models learn to hear is the foundation of audio AI.

The Multi-Label Classification Challenge

Unlike image classification where each image has one label, audio clips often contain multiple simultaneous sounds. A 10-second clip might have "Speech", "Music", and "Traffic" all at once.

Single-Label vs Multi-Label

ImageNet (Single-Label)

Each image has exactly one correct label. Use softmax + cross-entropy.

AudioSet (Multi-Label)

Each clip can have multiple labels. Use sigmoid + binary cross-entropy.
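The difference between the two setups can be sketched in plain Python. This is a minimal illustration, not any particular framework's implementation; the logits and the three class names are hypothetical values chosen for the example.

```python
import math

def softmax(logits):
    """Turn logits into one probability distribution that sums to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, target_index):
    """Single-label loss: negative log-probability of the one correct class."""
    return -math.log(softmax(logits)[target_index])

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def binary_cross_entropy(logits, targets):
    """Multi-label loss: one independent yes/no decision per class, averaged."""
    eps = 1e-12  # guards against log(0)
    losses = []
    for z, y in zip(logits, targets):
        p = sigmoid(z)
        losses.append(-(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)))
    return sum(losses) / len(losses)

# Hypothetical scores for the classes Speech, Music, Traffic.
logits = [2.0, 1.5, -3.0]
single = softmax(logits)              # classes compete: probabilities sum to 1
multi = [sigmoid(z) for z in logits]  # classes are independent: several can be high

loss_single = cross_entropy(logits, target_index=0)
loss_multi = binary_cross_entropy(logits, targets=[1, 1, 0])
```

With softmax, raising the probability of "Speech" necessarily lowers "Music". With per-class sigmoids, both can be close to 1 for the same clip, which is what a multi-label dataset needs.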

Why mAP (mean Average Precision)?

For multi-label problems, we need metrics that handle varying thresholds and class imbalance:

- AP (Average Precision): For each class, rank all clips by confidence and compute the area under the precision-recall curve.

- mAP (mean Average Precision): The average of AP across all 527 evaluation classes. It treats rare and common sounds equally.

- mAUC: The area under the ROC curve, averaged across classes. It is often reported alongside mAP.
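These metrics can be sketched in a few lines of plain Python. The version below is a simplified illustration under stated assumptions: AP is computed as the mean of the precision values at the rank of each positive clip, ties in score are broken arbitrarily by the sort, and a class with no positives is given AP 0.0. Library implementations may handle these edge cases differently. The toy scores and labels are made up.

```python
def average_precision(scores, labels):
    """AP for one class: mean precision at the rank of each positive clip."""
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    hits = 0
    precisions = []
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / len(precisions) if precisions else 0.0

def roc_auc(scores, labels):
    """AUC for one class: chance that a random positive outranks a random negative."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        return float("nan")  # undefined without both positives and negatives
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def mean_over_classes(metric, score_matrix, label_matrix):
    """Unweighted mean of a per-class metric (rows = clips, columns = classes)."""
    num_classes = len(score_matrix[0])
    values = []
    for c in range(num_classes):
        scores = [row[c] for row in score_matrix]
        labels = [row[c] for row in label_matrix]
        values.append(metric(scores, labels))
    return sum(values) / num_classes

# Toy example: 4 clips, 2 classes.
scores = [[0.9, 0.2], [0.8, 0.7], [0.3, 0.6], [0.1, 0.4]]
labels = [[1, 0], [0, 1], [1, 0], [0, 0]]
mAP = mean_over_classes(average_precision, scores, labels)
mAUC = mean_over_classes(roc_auc, scores, labels)
```

Because the mean is unweighted, a class with a handful of positive clips counts as much toward mAP as a class with many.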

AudioSet Ontology

AudioSet organizes 632 classes into a hierarchical ontology with 7 top-level categories:

- Human sounds: 42 classes

- Animal: 75 classes

- Music: 137 classes

- Sounds of things: 263 classes

- Natural sounds: 31 classes

- Source-ambiguous: 14 classes

Model Architectures

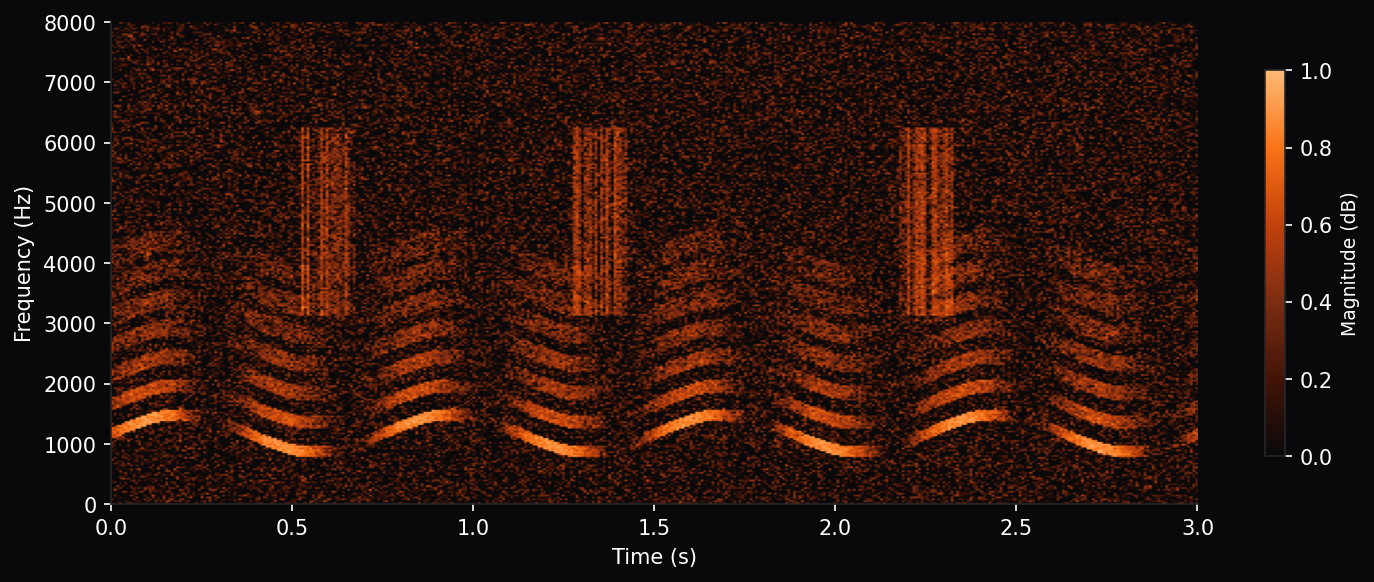

CNN-Based (PANNs)

Convolutional layers extract local frequency-time patterns. Attention pooling aggregates across time.
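Attention pooling over time can be sketched as follows. This is a minimal illustration that assumes a head producing two scores per frame and class: an attention logit and a classification logit. The attention logits are softmax-normalized over time and used to weight the per-frame sigmoid probabilities. The function name and input layout are assumptions for the example; the actual PANNs code may differ in detail.

```python
import math

def attention_pool(att_logits, cla_logits):
    """Aggregate frame-level scores into clip-level probabilities.

    Both inputs are [time][class] lists of raw scores.
    """
    num_frames = len(att_logits)
    num_classes = len(att_logits[0])
    clip_probs = []
    for k in range(num_classes):
        # Softmax over time: how much each frame contributes to class k.
        column = [att_logits[t][k] for t in range(num_frames)]
        m = max(column)
        exps = [math.exp(a - m) for a in column]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Per-frame probability that class k is present.
        frame_probs = [1.0 / (1.0 + math.exp(-cla_logits[t][k]))
                       for t in range(num_frames)]
        clip_probs.append(sum(w * p for w, p in zip(weights, frame_probs)))
    return clip_probs

# Two frames, one class: attention focuses on the first frame.
focused = attention_pool([[10.0], [-10.0]], [[4.0], [-4.0]])
# Uniform attention reduces to plain averaging over time.
uniform = attention_pool([[0.0], [0.0]], [[4.0], [-4.0]])
```

With uniform attention the result is the mean of the frame probabilities, so a short event is diluted by the silent frames around it. Learned attention lets the model score a clip mostly by the frames where the sound actually occurs.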

Vision Transformer (AST)

Treats spectrogram as image. Patch embeddings + self-attention captures global dependencies.
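Splitting a spectrogram into patches can be sketched like this. The helper names are hypothetical, and the sizes in the final call (128 mel bins, 1024 frames, 16x16 patches, stride 10) are assumed settings chosen for illustration rather than values stated above.

```python
def patch_grid(n_mels, n_frames, patch=16, stride=16):
    """Number of patch positions along the frequency and time axes."""
    freq_steps = (n_mels - patch) // stride + 1
    time_steps = (n_frames - patch) // stride + 1
    return freq_steps, time_steps

def extract_patches(spec, patch, stride):
    """Cut a [freq][time] spectrogram into flattened patch x patch tokens."""
    freq_steps, time_steps = patch_grid(len(spec), len(spec[0]), patch, stride)
    tokens = []
    for i in range(freq_steps):
        for j in range(time_steps):
            f0, t0 = i * stride, j * stride
            tokens.append([spec[f][t]
                           for f in range(f0, f0 + patch)
                           for t in range(t0, t0 + patch)])
    return tokens

# Toy spectrogram: 4 frequency bins x 6 frames, cut into 2x2 patches.
toy = [[f * 10 + t for t in range(6)] for f in range(4)]
tokens = extract_patches(toy, patch=2, stride=2)

# Sequence length for the assumed full-size settings (overlapping patches).
freq_steps, time_steps = patch_grid(128, 1024, patch=16, stride=10)
sequence_length = freq_steps * time_steps
```

Each flattened patch would then be linearly projected to an embedding and given a position embedding before entering the Transformer. A stride smaller than the patch size yields overlapping patches and a longer sequence, which increases self-attention cost.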

Audio Tokenizer (BEATs)

Learns discrete audio tokens via iterative SSL. Self-distillation from continuous to discrete.

2020: CNN Era (PANNs)

Kong et al. establish strong CNN baselines with attention pooling. mAP: 0.431

2021: Transformer Revolution (AST)

Gong et al. apply ViT to audio, transfer from ImageNet. mAP: 0.485 (+0.054)

2022: Efficient Transformers (HTS-AT)

Chen et al. use Swin architecture for better efficiency. mAP: 0.471

2023: Self-Supervised (BEATs)

Chen et al. iterate SSL with discrete tokenization. mAP: 0.498 (+0.013)

AudioSet Leaderboard

Detailed comparison on AudioSet eval set. All models use 10-second input clips with mel spectrogram features.

| Rank | Model | Organization | mAP | mAUC | Params | Pretraining | Year |
|---|---|---|---|---|---|---|---|
| #1 | BEATs iter3+ AS2M | Microsoft | 0.498 | 0.975 | 90M | AudioSet-2M self-supervised | 2023 |
| #2 | AST (AudioSet + ImageNet) | MIT/IBM | 0.485 | 0.972 | 87M | ImageNet-21k + AudioSet | 2021 |
| #3 | EfficientAT-M2 | TU Munich | 0.476 | 0.971 | 30M | ImageNet + AudioSet | 2023 |
| #4 | HTS-AT | ByteDance | 0.471 | 0.970 | 31M | AudioSet | 2022 |
| #5 | CLAP (HTSAT-base) | LAION/Microsoft | 0.463 | 0.968 | 86M | LAION-Audio-630K | 2023 |
| #6 | PANNs CNN14 | ByteDance | 0.431 | 0.963 | 81M | AudioSet from scratch | 2020 |

BEATs iter3+ AS2M

Iterative audio pre-training with discrete tokenization

AST (AudioSet + ImageNet)

First pure attention model for audio, no convolutions

EfficientAT-M2

Best efficiency, real-time capable on edge devices

HTS-AT

Efficient hierarchical structure, good compute/accuracy tradeoff

ESC-50 Benchmark

Environmental Sound Classification

ESC-50 is a simpler, cleaner benchmark: 2,000 5-second clips across 50 environmental sound classes. Single-label classification with 5-fold cross-validation makes it easier to compare models directly.
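The 5-fold protocol can be sketched as follows. This is a minimal illustration that assumes each clip carries a fold number from 1 to 5; the `majority_baseline` evaluator is a hypothetical stand-in for training and testing a real model, and the toy clips are made up.

```python
from collections import Counter

def cross_validate(clips, train_and_eval, num_folds=5):
    """Hold out each fold once; return mean accuracy and per-fold accuracies."""
    fold_accuracies = []
    for held_out in range(1, num_folds + 1):
        train = [c for c in clips if c["fold"] != held_out]
        test = [c for c in clips if c["fold"] == held_out]
        fold_accuracies.append(train_and_eval(train, test))
    return sum(fold_accuracies) / num_folds, fold_accuracies

def majority_baseline(train, test):
    """Stand-in for a real model: always predict the most common training label."""
    most_common = Counter(c["label"] for c in train).most_common(1)[0][0]
    return sum(c["label"] == most_common for c in test) / len(test)

# Toy data: 5 folds, each with two "dog" clips and one "rain" clip.
clips = [{"fold": f, "label": lab}
         for f in range(1, 6)
         for lab in ("dog", "dog", "rain")]
mean_acc, per_fold = cross_validate(clips, majority_baseline)
```

Reporting the mean over all five held-out folds, rather than a single split, is what makes numbers from different papers comparable on a dataset this small.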

| Rank | Model | Organization | Accuracy (%) | Params | Pretraining | Year |
|---|---|---|---|---|---|---|
| #1 | BEATs | Microsoft | 98.1 | 90M | AudioSet-2M | 2023 |
| #2 | SSAST | MIT/IBM | 96.8 | 89M | AudioSet + LibriSpeech | 2022 |
| #3 | CLAP | LAION | 96.7 | 86M | LAION-Audio-630K | 2023 |
| #4 | AST | MIT/IBM | 95.6 | 87M | ImageNet + AudioSet | 2021 |
| #5 | PANNs CNN14 | ByteDance | 94.7 | 81M | AudioSet | 2020 |
| #6 | Wav2Vec 2.0 + Linear | Meta | 92.3 | 317M | LibriLight 60k hours | 2020 |

Known AudioSet Issues

- Label Noise: ~30% of labels may be incorrect or incomplete

- Missing Videos: ~20% of YouTube videos no longer available

- Class Imbalance: "Speech" appears in roughly a million clips, while rare classes such as "Yodeling" have only hundreds

- Weak Labels: Labels apply to full 10s clip, not specific moments

- Copyright Issues: Some videos may have been removed for copyright

Best Practices

- Pretraining Matters: ImageNet weights transfer surprisingly well

- Augmentation: SpecAugment, mixup, and time-stretch are essential

- Balanced Sampling: Oversample rare classes during training

- Multi-Scale: Use multiple spectrogram resolutions

- Ensemble: Top results often combine multiple architectures
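Three of the practices above (mixup, SpecAugment-style masking, and balanced sampling) can be sketched in plain Python. This is a simplified illustration: the function names are hypothetical, the mask positions and mixing coefficient are fixed here so the result is reproducible, and the time-warping step of SpecAugment is omitted. In training these values would be drawn at random for every example.

```python
import random

def mixup(spec_a, labels_a, spec_b, labels_b, lam):
    """Blend two examples; labels are blended with the same coefficient."""
    mixed_spec = [[lam * a + (1 - lam) * b for a, b in zip(row_a, row_b)]
                  for row_a, row_b in zip(spec_a, spec_b)]
    mixed_labels = [lam * ya + (1 - lam) * yb
                    for ya, yb in zip(labels_a, labels_b)]
    return mixed_spec, mixed_labels

def mask_spectrogram(spec, f0, f_width, t0, t_width):
    """SpecAugment-style masking: zero one frequency band and one time band."""
    masked = [row[:] for row in spec]  # copy so the input stays untouched
    for f, row in enumerate(masked):
        for t in range(len(row)):
            if f0 <= f < f0 + f_width or t0 <= t < t0 + t_width:
                row[t] = 0.0
    return masked

def balanced_weights(label_matrix):
    """Per-clip sampling weight: sum of inverse frequencies of its classes."""
    num_classes = len(label_matrix[0])
    counts = [sum(row[c] for row in label_matrix) for c in range(num_classes)]
    return [sum(1.0 / counts[c] for c in range(num_classes) if row[c])
            for row in label_matrix]

# Toy data: 2 mel bins x 3 frames, 2 classes (all values are made up).
spec_a = [[1.0, 1.0, 1.0], [1.0, 1.0, 1.0]]
spec_b = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
lam = 0.7  # in practice often drawn with random.betavariate(alpha, alpha)
mixed_spec, mixed_labels = mixup(spec_a, [1, 0], spec_b, [0, 1], lam)
masked = mask_spectrogram(spec_a, f0=0, f_width=1, t0=2, t_width=1)

# Three clips of a common class, one clip of a rare class.
weights = balanced_weights([[1, 0], [1, 0], [1, 0], [0, 1]])
batch = random.choices(range(4), weights=weights, k=8)  # rare clip drawn more often
```

Mixup produces soft targets such as 0.7 and 0.3, which fits the sigmoid + binary cross-entropy setup directly. Note that a clip with no labels gets weight 0 under this weighting scheme and would never be sampled.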

Related Datasets

AudioSet

2017. 2M+ human-labeled 10-second YouTube video clips covering 632 audio event classes.

ESC-50

2015. 2,000 environmental audio recordings organized into 50 classes (animals, natural soundscapes, etc.).
